The death and life of great online cities

Note: this is the second part of a series on moderation in online and decentralised communities. Previous part(s) here: I

Last week (shh, yes, last week) we were talking about how the only long-term viable mode of operation for an online community of sufficient size is that of a sovereign state where the users are citizens who consent to the actions of that state. This means that citizens, by withholding consent without being kicked out, would be able to control how the platform works. The model we propose offers the user-citizens rights and responsibilities, such as habeas corpus (you cannot be banned from your Gmail or Facebook account for no reason), the right to a trial (the process that bans you is a court of law, open to the public) and the right to an appeal, with the ultimate goal being that the tech we have come to depend on so much in our lives, tech that profits off of our use, treats us like human beings in a civilised jurisdiction.

As to why this matters to us (us being Aether, a peer-to-peer (P2P), decentralised public space): we need the consent of the users more than anyone because, in our system, users are the platform. They are not visitors to Disneyland who passively watch the exhibits, or customers to be advertised at, since the content on Aether’s P2P network is distributed via the users’ very own computers. If we don’t have the consent of our users, we have no network.

This week, I’d like to get a little more practical and attempt to address a few of the criticisms pointed at the argument that online communities should more resemble actual, real-world jurisdictions.

Arguments against

There are three common arguments against online communities behaving as if they were states, outlined below. We’ll take them one by one.

- No stake. People don’t have enough at stake to make the system work (the weight of the system is more than the benefit it accrues).

- No teeth. The rule-breakers can’t be enforced against because real-world jurisdictions can punish and online ones cannot, thus any online system will be overwhelmed by rule-breakers.

- No incentive for the overlord. The companies that provide the public spaces own them and it is not in their best interest to offer these rights to their users.

‘People don’t care enough to make it work’

This argument against having a state-like jurisdiction in a public space on the Internet is, essentially, that people do not care enough to make the machinery of such a system function. For example, a system that makes moderators accountable to the people on the platform, by making moderation logs public and letting mods be removed, only works if people care enough to use it. If no one uses it, that feature is a pure negative in terms of complexity and bug surface area, and the platform will be objectively worse for having it. By implementing features that people aren’t asking for, you’re making your product heavier and more bug-prone, and you’re losing precious development time that could be better spent on features people actually do want.

This is a fair argument, but it does depend on exactly which group of people you’re talking about. As of early 2021, when this was written, it has become more and more visible that the tech companies who own the most popular platforms act in ways that don’t acknowledge the disruption that banning an account (like Gmail, where your main mail account probably resides) causes in one’s life, as exemplified by currently ongoing news. It’d likely be fair to say that users today acknowledge the risk of being randomly ‘killed off’ online, in that people now understand how valuable those accounts are to them. So as far as understanding the importance goes, I think people do understand it, and the ‘people just don’t care enough’ aspect of the ‘no stake’ argument is no longer true.

Exhibit 1a: People with better things to do than armchair legislating

(Hendrick Avercamp, Winter Landscape with Skaters, 1608)

However, there isn’t as yet any demand from mainstream internet users for a solution to this problem. This lack of demand, I think, has several aspects to it. The most obvious one is that users of a community are isolated from one another by virtue of it being online, and if one of them gets ‘disappeared’ into the night by the platform, it’s not obvious to the others that the user was removed, as he or she might have just lost interest. By contrast, in a real-world community, somebody vanishing into thin air is a lot more visible, and a lot more likely to trigger questions. Another component is that a significant number of users live in jurisdictions where the rule of law is not as strong, and to them, online platforms treating all of us as serfs is in fact how the world works in their offline lives as well. In essence, we should realise that the ability to care about this is an immense privilege, and we should not expect everyone to spend time thinking about it yet: there are a lot of people on this planet who are working on the lower steps of the societal Maslow’s Triangle.

I think this makes sense. The space of mind that we have that allows us to care about these things is indeed a privilege. However, the fact that only a subset of people is capable of caring about not being treated awfully by giant corporations does not make it any less important. If anything, as technologists, we owe it to the mainstream public that the world we build, and worlds we invite them into are hospitable, dignified and just. The best way to do that is to be there before them to build it right.

‘Barbarians at the gates: they have swords, you have pool noodles’

This argument is one of defensibility. All large communities that govern themselves are states of some sort. A state has real-world power to exclude or imprison people for not following the mores of the society it encompasses. The ability to apply force (of any sort) is essential to the concept of the state because that is, ultimately, what a state is: a group of people who have agreed to pool their individual agency into an entity bigger than themselves, so that their collective power is greater than any of them can exert alone, and ideally, greater than the sum of its parts. Fundamental to the existence of an ancient Greek city-state was the ability to ostracise: a vote cast by its citizens that, if successful, results in the banishment of someone from that city and anywhere the city governs (for ten years, in the Athenian case). In ancient Greece at least, trying to come back from ostracism early generally ended badly.

Online communities, on the other hand, usually do not have this power. They are collections of people from a variety of jurisdictions around the world, and their members cannot ultimately be held to account in a way that is more forceful than being temporarily removed from that community. The exclusion isn’t even permanent. There is no good way to identify someone on the internet consistently: someone could just come back under a different nickname and no one would be the wiser. Unless you tie the account on your system to a real-world identity like an actual ID card, it’s simply not possible to banish someone permanently for not playing ball, or even for actively trying to destroy your community. Even then, real states have trouble with fake ID cards, so the best you can get is about as good as a real state… that is to say, not good, at this.

So if even the real governments can’t get a good handle on this problem, how can we believe a virtual city would?

Should've spent a little less on the hat, a little more on the armour

(TV Series still, Barbarians, Netflix, 2020)

Well, there are a few things here. First of all, yes, it being a virtual community does make keeping out the bad people (according to the in-community) harder. Yes, it is possible for an assailant to create many, many accounts for spam, or just create a few to harass a certain few people that he does not like. But on the other hand, the community being online also allows for a few things that would not be possible in real life: things that are too hard to track within the limitations of human wetware become possible to track. For example, it would be very hard to keep track of who invited whom on the scale of millions in a real-world community, but it is not only possible but very easy in a virtual one with the aid of software. And with this, we can create a stake. If one of the people you happened to invite ends up a spammer, you lose your invite privileges. If two are such, then you lose your account. If three are such, everyone you ever invited loses their accounts. If four or more are such, everyone the person who invited you also invited, alongside your ‘benefactor’, loses their accounts. Cruel? Certainly so, but cruelty is only possible with power. If you thought this was cruel, you concede that it is possible to exercise power even if the community is virtual, without the need for any sort of a real-world ID check.
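To make the invite-lineage idea a little more concrete, here is a minimal sketch in Python. It is not how Aether implements anything; the names `Account` and `flag_spammer` are made up for illustration, and it assumes two things the paragraph above leaves open: that the penalties are cumulative, and that ‘everyone you ever invited’ means your direct invitees only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # compare accounts by identity; the tree is self-referential
class Account:
    """Hypothetical account record that remembers who invited whom."""
    name: str
    inviter: Account | None = None
    invitees: list[Account] = field(default_factory=list)
    can_invite: bool = True
    banned: bool = False
    is_spammer: bool = False

    def invite(self, name: str) -> Account:
        """Create a new account tethered to this one."""
        if self.banned or not self.can_invite:
            raise PermissionError(f"{self.name} may not invite anyone")
        newcomer = Account(name, inviter=self)
        self.invitees.append(newcomer)
        return newcomer


def flag_spammer(spammer: Account) -> None:
    """Ban a spammer and apply the escalating penalties to its inviter."""
    spammer.is_spammer = True
    spammer.banned = True
    inviter = spammer.inviter
    if inviter is None:
        return  # a root account has no one to answer for it

    # Count how many of the inviter's direct invitees turned out to be spammers.
    strikes = sum(a.is_spammer for a in inviter.invitees)

    if strikes >= 1:  # one spammer: lose invite privileges
        inviter.can_invite = False
    if strikes >= 2:  # two: lose the account
        inviter.banned = True
    if strikes >= 3:  # three: every direct invitee loses their account
        for account in inviter.invitees:
            account.banned = True
    if strikes >= 4 and inviter.inviter is not None:
        # four or more: the benefactor and all of the benefactor's invitees go too
        benefactor = inviter.inviter
        benefactor.banned = True
        for account in benefactor.invitees:
            account.banned = True
```

The only state this needs is a pointer from each account to its inviter, which is exactly the bookkeeping that is impractical at scale in the real world and trivial in software.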

All that to say, barbarians are certainly at the gates, but we have many ways to fight them off. Being online means no physical violence, they can’t force their way in! We can charge them entry, one-time or recurring, we can make them dance to the tune, and if they don’t, we’ve already recovered the cost of moderation from them: every user coming in pays what they will cost to moderate for a year, ahead of time. We can make them be allowed to come in only if tethered by rope to a benefactor, and if someone makes trouble, we pull the rope and the whole of the corrupt lineage gets dragged into the sunlight — and then we vote for ostracism. The possibilities are endless here: the point is that we have the power to set the rules. Yes, nothing can be enforced in the physical world, but every adult knows that the cruellest of punishments can be dealt by a person speaking quietly, just in the social sphere. Human creativity is still sharper than most stabby things we’ve come up with so far, and that’s for no lack of trying on the latter either.

‘Why would anyone with a popular app ever implement this? What’s in it for them?’

So, this argument questions why, say, Facebook would ever implement this. It’s a fair question: the existing cadre of good boys do have quite a lot invested in the current status quo of your indentured servitude to them, as any rational business would. So why would they, indeed? Why would they move to a business model that shares power with you, the user, over the existing one in which you get nothing? What rational incentive would they have to even consider such a thing?

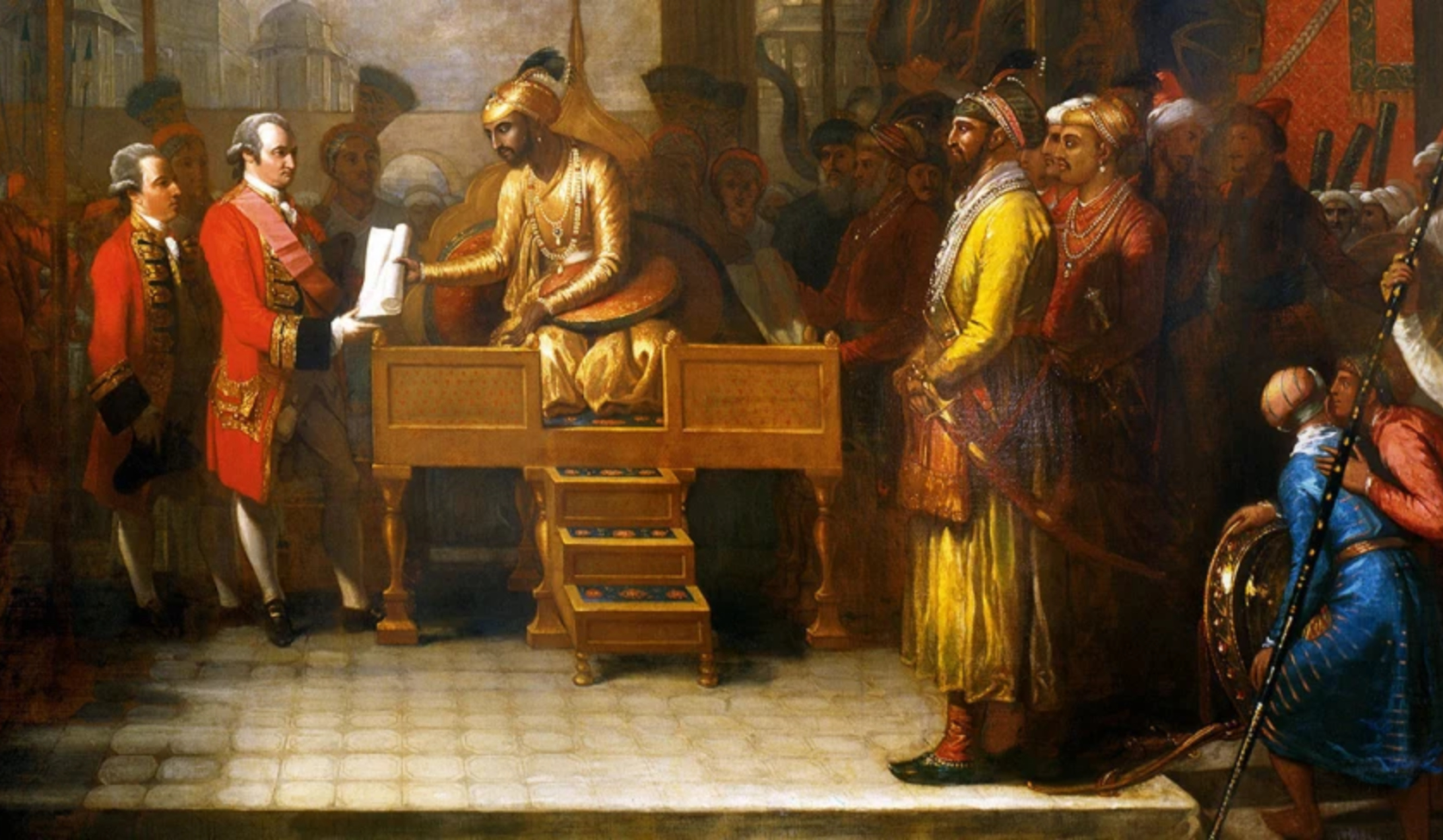

Merchants of The East India Company signing off yet another Very Equitable Trade Deal

(Benjamin West, Shah Alam conveying the grant of the Diwani to Lord Clive, 1765)

This is a little more nuanced than the others, so I want to give this argument a little more weight. I can rule out the wrong answer outright though: it’s not the ‘invisible hand’ that would move them, since capitalism only works like that in theory. In real life, there are a lot of moats around existing businesses that defend them, and contrary to the argument that says ‘if you build a better mousetrap, the world will beat a path to your door’, your mousetrap has to be actually, significantly better: it has to overcome not only the existing best solution but also the effort it takes to, so to say, beat that path.

So — is the decentralised, participatory web going to be lucrative any time soon? No, at least not for companies organised in the current ways of doing things. So no invisible hand for you.

We can approach it from the other end though: is the experience of the user any better, for the ordinary user who might never even participate in any sort of election whatsoever?

I believe so, yes. I think this is a better solution even for the completely passive reader, because what it gives that no other system can give is a guarantee that what you are seeing people discuss bears some resemblance to reality in terms of what people are thinking right now, simply because there is nothing between you and the discussion you’re seeing.

This is a point that is often trivialised: it’s common to say that the people who care about censorship are either a) lunatics, or b) hiding something, considering that our society has achieved perfect free speech of the approved opinions. This does have some truth to it: some of the people decrying lack of free speech do sound a lot like lunatics (or worse). But the thing to realise is that outright censorship is not the only dampener on a community. When that kind of censorship happens it’s obvious, so there is not much to talk about here. The other kinds of speech suppression are much more interesting. One, in particular, applies to political speech, which, given today’s polarised environment, is most speech. The trick is to pressure the company that is the conduit: the company owning the network you’re in might not be willing to suppress anything, but political pressure can shift the Overton window of the company, and thus force the company to change with it. This is especially insidious because shifting the company’s Overton window usually has the effect of pushing its users to follow along as well, without them realising, by making what is acceptable to say in public space and what is acceptable to say in that company’s space different. From the point of view of a user without a clue as to what is happening, it feels like their online friends are all much more fervent supporters of their political creeds than they appear to be in meatspace, because an attack on the Overton window of the company makes it go out of sync with the same window in real life, so what is acceptable speech face to face in the street and what is acceptable in the company-owned online community drift very far apart.

While this is interesting, the reason it is meaningful for our point is that there is nothing the company can do here, however good-natured and however willing it may be. The problem is not something the company can do anything about, because the problem is its own existence: only by removing the company can we remove the attack surface that is the Overton window of the intermediary entity. A self-governing online space that relies on its citizens to make decisions is the only way you can be guaranteed that there is no one who can be attacked to change what you can see or talk about: there is only one Overton window, the community’s.

So, considering that, my point proves the exact opposite: not only does a company with a popular social network have no reason to move to this model, it also has every incentive to avoid it and, furthermore, to actively try to kill it before it takes root.

What do we do here then? I see three ways.

One of them is that these companies get legislated or strong-armed into this model. Legislation is simple; strong-arming can take many forms. For example, removing Section 230 protections for social networks means that the social network is now directly responsible for the content on its platform, which results in a sharply constrained range of acceptable speech: only the content the company is astronomically unlikely to get sued over (i.e. cat pictures). This is fun for a few days, but it means a social network that doesn’t allow people to be social. So either the company moves to a peer-to-peer model where the users make the moderation decisions and the company is thus not liable, or the company gets legislated out of existence directly, or it loses popularity due to the aforementioned sharply constrained speech, with its former audience moving to other platforms, which, given the legal situation involved, would likely be decentralised ones.

Another way takes a much more optimistic view of human nature. This presupposes that people essentially wake up to the idea that, maybe, having their entire social life under the control of one benevolent dictator is not a good idea, exactly for the reasons why a benevolent dictatorship in real life is not a good idea. This would eventually trigger a move to the newer, more humane platforms.

The third and last way is more macabre. It’s often said that science advances one funeral at a time: people never get convinced of a competing theory, but they die, and eventually there’s no one left believing the old, outdated theory. A new generation comes in, and when they have to sign up for a social network, the choice is starkly obvious to them in a way that it is not, and perhaps never will be, to us today, the generation reading this.

Either way, history marches forward.

Next week

In the first week we talked about why a government is needed for any online community today, and this week we talked about the common arguments against that idea and what we think about them. In the next two weeks, we’ll be talking about a practical, real-world implementation that we’re building of a system that satisfies these constraints.

See you then!